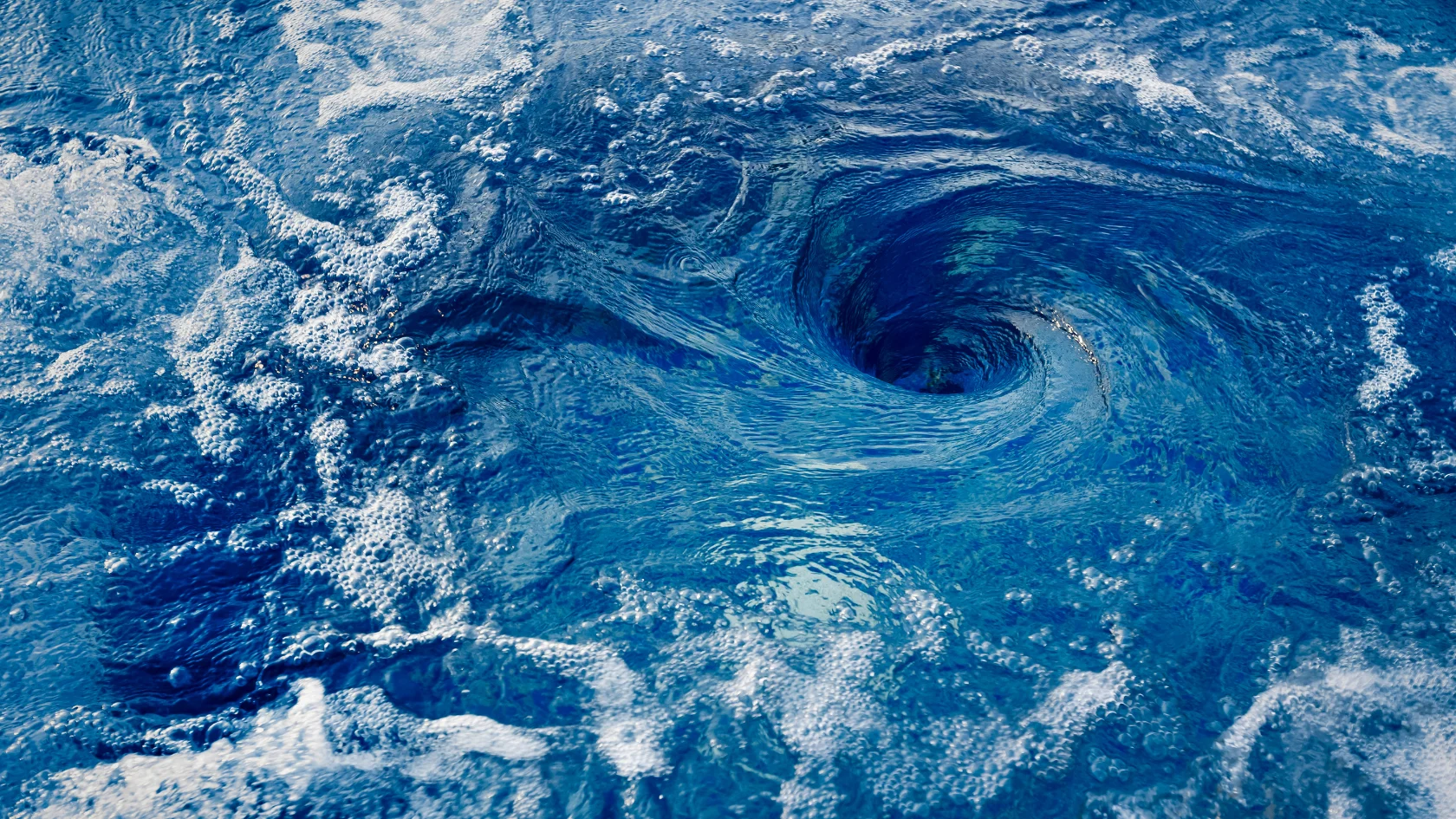

When most people think about AI infrastructure, they focus on chips and electricity. But there’s another vital input that’s quietly becoming a limiting factor: water.

Many large data centers, especially those running AI workloads, consume millions of gallons of water every year to keep servers cool. As demand for AI continues to grow, so does the need for cooling systems that can handle the heat without draining local water supplies.

This article breaks down why water use is becoming a major concern in AI infrastructure, how companies are responding, and what this means for sustainability and regional planning.

Why AI Data Centers Use So Much Water

High-performance computing (HPC) environments — like those used to train large language models — generate significant heat. To maintain safe operating conditions, these facilities require constant cooling.

Many data centers use evaporative cooling, which is more energy-efficient than traditional air conditioning but far more water-intensive. In these systems:

- Heat is carried away as water evaporates into the atmosphere, so that water is lost rather than recirculated

- This process can consume millions of gallons per year, especially in hotter climates

- Water use increases further during peak demand or heatwaves
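A rough physics estimate shows why evaporation uses so much water. Evaporating one kilogram (about one liter) of water absorbs roughly 2.4 MJ of heat near typical cooling-tower temperatures, so every megawatt-hour of heat rejected this way costs on the order of 1,500 liters. The sketch below turns that into a daily figure for a hypothetical facility. It assumes all heat leaves through evaporation (real towers also shed some heat directly to the air) and ignores blowdown and drift losses, so treat the result as an order-of-magnitude illustration. The constants and function names are illustrative, not taken from any standard tool.

```python
# Idealized estimate of water evaporated by an evaporative cooling system.
# Assumptions (illustrative only):
#   - all rejected heat leaves as latent heat of evaporation
#   - latent heat of water is ~2.4 MJ/kg near typical cooling-tower temperatures
#   - blowdown and drift losses are ignored

LATENT_HEAT_MJ_PER_KG = 2.4          # approx. latent heat of vaporization near 25-40 °C
MJ_PER_MWH = 3600.0                  # 1 MWh = 3,600 MJ
LITERS_PER_KG = 1.0                  # ~1 kg of water per liter
LITERS_PER_US_GALLON = 3.785411784   # exact definition of the US gallon


def evaporated_liters(heat_mwh: float) -> float:
    """Liters of water evaporated to reject `heat_mwh` of heat (idealized)."""
    if heat_mwh < 0:
        raise ValueError("heat_mwh must be non-negative")
    kilograms = heat_mwh * MJ_PER_MWH / LATENT_HEAT_MJ_PER_KG
    return kilograms * LITERS_PER_KG


def liters_to_gallons(liters: float) -> float:
    """Convert liters to US gallons."""
    return liters / LITERS_PER_US_GALLON


if __name__ == "__main__":
    # Hypothetical facility: 100 MW of heat rejected continuously for one day.
    daily_heat_mwh = 100 * 24
    liters = evaporated_liters(daily_heat_mwh)
    print(f"{liters:,.0f} L/day (~{liters_to_gallons(liters):,.0f} US gal/day)")
```

For the hypothetical 100 MW load, this works out to about 3.6 million liters, or roughly 950,000 US gallons, per day, which helps explain how a large facility's water use reaches into the millions of gallons.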

As AI models get bigger and more companies rush to adopt them, water usage per facility is climbing.

The Numbers Behind the Concern

- A single hyperscale data center can use 1–5 million gallons of water per day

- Microsoft reported that its global water consumption grew by 34 percent from 2021 to 2022, an increase that outside researchers have linked largely to its AI workloads

- Some estimates suggest that training a single large AI model can evaporate hundreds of thousands of liters of clean freshwater, depending on hardware, cooling, and location

While these figures vary by setup and efficiency, the trend is clear: AI requires water, and the scale is growing fast.
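Most published estimates follow the same basic arithmetic: multiply a workload's energy use by how much water is consumed per kilowatt-hour. On site, that ratio is captured by water usage effectiveness (WUE, liters per kWh of IT energy, a metric defined by The Green Grid). Off site, power plants also consume water to generate the electricity. The sketch below combines both under simplifying assumptions. The `SiteProfile` fields and every number in the example are hypothetical placeholders chosen to show how cooling design and location move the result, not figures reported by any provider.

```python
# Sketch: estimating water use for a workload from its energy consumption.
# Assumptions (illustrative, not measured values):
#   - on-site water use scales with IT energy via WUE (liters per kWh)
#   - off-site water use scales with total facility energy (IT energy x PUE)
#     via the water intensity of the local electricity supply (liters per kWh)

from dataclasses import dataclass


@dataclass
class SiteProfile:
    wue_l_per_kwh: float         # on-site water usage effectiveness
    pue: float                   # power usage effectiveness (>= 1.0)
    grid_water_l_per_kwh: float  # water consumed off site per kWh generated


def workload_water_liters(it_energy_kwh: float, site: SiteProfile) -> dict:
    """Return estimated on-site, off-site, and total water use in liters."""
    if it_energy_kwh < 0 or site.pue < 1.0:
        raise ValueError("energy must be non-negative and PUE must be >= 1.0")
    on_site = it_energy_kwh * site.wue_l_per_kwh
    off_site = it_energy_kwh * site.pue * site.grid_water_l_per_kwh
    return {"on_site": on_site, "off_site": off_site, "total": on_site + off_site}


if __name__ == "__main__":
    energy_kwh = 1_000_000  # hypothetical 1 GWh training run
    # Two hypothetical sites running the same workload.
    hot_dry = SiteProfile(wue_l_per_kwh=1.8, pue=1.2, grid_water_l_per_kwh=3.0)
    cool = SiteProfile(wue_l_per_kwh=0.2, pue=1.1, grid_water_l_per_kwh=1.0)
    for name, site in [("hot/dry", hot_dry), ("cool", cool)]:
        est = workload_water_liters(energy_kwh, site)
        print(name, {k: round(v) for k, v in est.items()})
```

With these made-up inputs, the same 1 GWh workload uses roughly four times as much water at the hot, dry site as at the cool one, which mirrors why published figures vary so widely by setup and location.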

Regional Tensions Are Already Emerging

Water scarcity is a growing issue across parts of the U.S., particularly in the Southwest and Midwest. As data center expansion accelerates, local governments are beginning to scrutinize how much water tech companies use — and whether it benefits the broader community.

Recent concerns include:

- Drought-stricken counties weighing moratoriums on new data centers

- Environmental impact reviews delaying or blocking new buildouts

- Public backlash over a lack of transparency about corporate water consumption

These tensions aren’t just about resources — they’re also about who gets priority access to critical infrastructure.

How the Industry Is Responding

Major cloud and AI providers are aware of the issue and are starting to act:

- Google and Microsoft have committed to becoming water positive by 2030, meaning they aim to replenish more water than they consume

- Closed-loop cooling systems are being piloted to reduce water loss

- Some companies are locating data centers near recycled water sources or using non-potable water for cooling

Still, these solutions require investment, planning, and coordination with municipalities — and many smaller providers may not be equipped to follow suit.

Final Thoughts

The future of AI doesn’t just depend on algorithms and semiconductors. It also depends on how responsibly we manage the resources that keep those systems running — including water.

As demand for AI services climbs, water will become a strategic constraint in infrastructure planning. Companies that ignore it risk public backlash, regulatory friction, and sustainability setbacks. Those that innovate around it will have a long-term advantage.

The AI boom may be powered by code, but it’s cooled by water.